Central logging is widely used in the Linux/Unix world, so I want to show you how to gather Windows Event Logs with a good old syslog server as well.

- On the server side, it's quite simple: use plain vanilla syslog or anything with syslog capabilities (e.g. Rsyslog or, even better, Splunk).

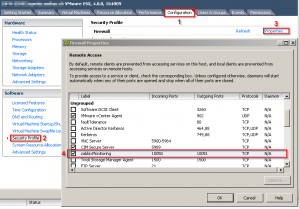

- On your Windows system, get eventlog-to-syslog (http://code.google.com/p/eventlog-to-syslog), put the two program files in C:\Windows\System32, and install it as a service as shown below:

        C:\Users\administrator>evtsys -i -h <SYSLOGHOST>
        Checking ignore file...
        Aug 23 20:27:25 HOSTNAME Error opening file: evtsys.cfg: The system cannot find the file specified.
        Aug 23 20:27:25 HOSTNAME Creating file with filename: evtsys.cfg
        Command completed successfully

        C:\Users\administrator>net start evtsys
        The Eventlog to Syslog service is starting.
        The Eventlog to Syslog service was started successfully.
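
To check that events actually arrive before you touch a real syslog server, a throwaway listener can help. The following is only a minimal sketch, assuming evtsys sends plain BSD-style syslog over UDP and that you installed it with `-p 5514` so the listener does not need a privileged port. The names `parse_pri` and `listen` are made up for this example and are not part of eventlog-to-syslog.

```python
import re
import socket

# Facility and severity names in numeric order, following BSD syslog (RFC 3164).
FACILITIES = [
    "kern", "user", "mail", "daemon", "auth", "syslog", "lpr", "news",
    "uucp", "cron", "authpriv", "ftp", "ntp", "audit", "alert", "clock",
    "local0", "local1", "local2", "local3",
    "local4", "local5", "local6", "local7",
]
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

# A syslog packet starts with "<PRI>", where PRI = facility * 8 + severity.
PRI_RE = re.compile(r"^<(\d{1,3})>")


def parse_pri(message):
    """Split a raw syslog line into (facility, severity, rest).

    Returns (None, None, message) if there is no valid <PRI> prefix.
    """
    match = PRI_RE.match(message)
    if not match:
        return None, None, message
    facility, severity = divmod(int(match.group(1)), 8)
    if facility >= len(FACILITIES):
        return None, None, message
    return FACILITIES[facility], SEVERITIES[severity], message[match.end():]


def listen(host="0.0.0.0", port=5514):
    """Print every UDP syslog packet received on host:port (assumed test port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        print(f"Listening for syslog on UDP {host}:{port}")
        while True:
            data, (sender, _) = sock.recvfrom(8192)
            text = data.decode("utf-8", errors="replace")
            facility, severity, rest = parse_pri(text)
            print(f"{sender} [{facility}.{severity}] {rest.strip()}")
```

To try it, add a final `listen()` call, run the script on the log host, and trigger an event on the Windows machine. With the default facility, messages should show up tagged as `daemon`.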

Here are the options for eventlog-to-syslog:

    Version: 4.4 (32-bit)
    Usage: evtsys -i|-u|-d [-h host] [-b host] [-f facility] [-p port]
           [-t tag] [-s minutes] [-l level] [-n]
      -i           Install service
      -u           Uninstall service
      -d           Debug: run as console program
      -h host      Name of log host
      -b host      Name of secondary log host
      -f facility  Facility level of syslog message
      -l level     Minimum level to send to syslog.
                   0=All/Verbose, 1=Critical, 2=Error, 3=Warning, 4=Info
      -n           Include only those events specified in the config file.
      -p port      Port number of syslogd
      -q bool      Query the Dhcp server to obtain the syslog/port to log to
                   (0/1 = disable/enable)
      -t tag       Include tag as program field in syslog message.
      -s minutes   Optional interval between status messages. 0 = Disabled

    Default port: 514
    Default facility: daemon
    Default status interval: 0
    Host (-h) required if installing.
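
If you roll this out to more than a handful of machines, it can be handy to generate the install command instead of typing it. Here is a rough sketch that builds the argument list from the options listed above. The function name `evtsys_install_command` and its parameter names are invented for this example, only flags from the usage text are used, and the assumption is that the facility is passed by name (as the `daemon` default suggests).

```python
def evtsys_install_command(host, secondary_host=None, facility=None, port=None,
                           tag=None, status_minutes=None, level=None,
                           include_only=False):
    """Build the argument list for 'evtsys -i' from the documented options."""
    if not host:
        # The usage text says: Host (-h) required if installing.
        raise ValueError("host is required when installing the service")
    if level is not None and level not in range(5):
        # Documented levels: 0=All/Verbose, 1=Critical, 2=Error, 3=Warning, 4=Info
        raise ValueError("level must be between 0 and 4")

    cmd = ["evtsys", "-i", "-h", host]
    if secondary_host:
        cmd += ["-b", secondary_host]
    if facility:
        cmd += ["-f", facility]      # assumed to be a facility name
    if port is not None:
        cmd += ["-p", str(port)]
    if tag:
        cmd += ["-t", tag]
    if status_minutes is not None:
        cmd += ["-s", str(status_minutes)]
    if level is not None:
        cmd += ["-l", str(level)]
    if include_only:
        cmd.append("-n")
    return cmd


# Example: send warnings and worse to a (hypothetical) host named "loghost".
print(" ".join(evtsys_install_command("loghost", port=5514, level=3)))
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` from an elevated prompt, followed by `net start evtsys` as shown earlier.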